How to use the Pirate Framework to standardize company metrics

As M1 grew over the last few years, measuring the impact of our teams’ efforts became increasingly tough. This wasn’t because we had too little data available or not enough access to charts and graphs. Instead, we expanded into more products across multiple finance verticals that all have different definitions and speak different languages.

To understand the health of our products in relation to one another, we needed to speak the same language. We needed a standardized metrics framework to understand, discuss, and measure the entire user lifecycle consistently across all our products. So, we started using the Pirate framework.

What is the Pirate Framework?

The Pirate Framework is a growth hacking framework that measures fast-paced and sustainable product-led growth through a complete user lifecycle funnel. It’s often referred to as AARRR because the framework consists of the following metrics: Acquisition, Activation, Retention, Referrals, and Revenue.

What are pirates most known for? AARRR.

Here’s how we define each metric:

- Acquisition – When a user does the minimum amount necessary to become a customer.

- Activation – When a user goes from acquired to the minimum amount necessary to “use” the product.

- Retention – Whether a user stays above the minimum amount necessary. A user who falls below it for 30 consecutive days is deactivated.

- Referral – When a user refers another user, who then activates the product.

- Revenue – A user’s generated revenue and gross margin from product activity.

We made slight additions to fit our business needs at M1, making our framework AAARRRRS:

- Activity – A user’s level of engagement with a product (measurement varies by product).

- Rescue – When a user who was previously deactivated reengages with the minimum amount necessary.

- Satisfaction – A user’s Net Promoter Score (NPS).

How we use the Pirate Framework

First, we defined each AAARRRRS metric consistently across all products.

An activated user must mean the same thing for every product. For example, an activated user for M1 Invest is a user who has added money to their account and holds a balance greater than $0. Similarly, an activated user for M1 Borrow is a user who has borrowed more than $0.
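A minimal Python sketch of what “the same definition across products” can look like: one shared activation rule, where only the meaning of the measured amount changes per product. It assumes each product exposes a single per-user amount (a held balance for Invest, an outstanding borrowed amount for Borrow) and that a positive Invest balance implies money was added. `ProductActivity`, `MINIMUM_AMOUNT`, and `is_activated` are hypothetical names, not M1’s code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductActivity:
    """Hypothetical per-user, per-product snapshot."""
    product: str   # "invest" or "borrow"
    amount: float  # Invest: balance held; Borrow: amount currently borrowed


# Hypothetical thresholds: every product uses the same "greater than minimum" rule,
# so adding a product means adding one entry here, not new logic.
MINIMUM_AMOUNT = {"invest": 0.0, "borrow": 0.0}


def is_activated(activity: ProductActivity) -> bool:
    """A user is activated once the product's key amount exceeds its minimum."""
    return activity.amount > MINIMUM_AMOUNT[activity.product]


print(is_activated(ProductActivity("invest", 150.0)))  # True
print(is_activated(ProductActivity("borrow", 0.0)))    # False
```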

These are our L1 metrics: high-level user lifecycle metrics that span from a user’s first touchpoint to the point where that user generates income for the company. They are tied directly to business outcomes and used to measure company objectives and key results (OKRs), which gives clear insight into where the lifecycle is performing well and where it is underperforming.

For example, if our Marketing team runs a campaign to drive more users onto the platform, they will look at Acquisition to assess the impact. If our Client Development team wants to focus on keeping clients, they will look at Retention across all products to know where to focus. If Product wants to focus on initiatives driving more income, they will consider Revenue for each product.

Each of these L1 metrics can be broken down into L2 metrics, which help our team better understand what’s driving trends in L1 metrics.

Why does this matter?

In the early days at M1, Invest was our main product and “funded user” was one of our most important metrics. We wanted to know how many new customers actually “used” our platform. At the time, the funding threshold was more than $25.

As we launched new products, we still wanted to know about product usage. But “usage” for one product may be different for another. This created a few challenges:

- How do you have cross-product discussions about performance or users? With differing definitions of the user lifecycle, we kept having to re-explain what a given type of user meant for one product versus another.

- How do you establish consistent goals across the company? It became more difficult to align our focus as a company, as each team would think about its users, engagement, and success uniquely.

- How do you efficiently develop metrics for new product lines? With the Pirate Framework, pieces of work can be copied and reused across products, freeing up time to solve the problems that are unique to each product.

We kept these challenges in mind as we designed, deployed, and productized the Pirate Framework.

Design

Database design is critical to making any standardized framework successful because it affects usability and efficiency. The data for the Pirate Framework needed to be accessible to all data users and well maintained over time. The underlying logic also had to be consistent across all product areas.

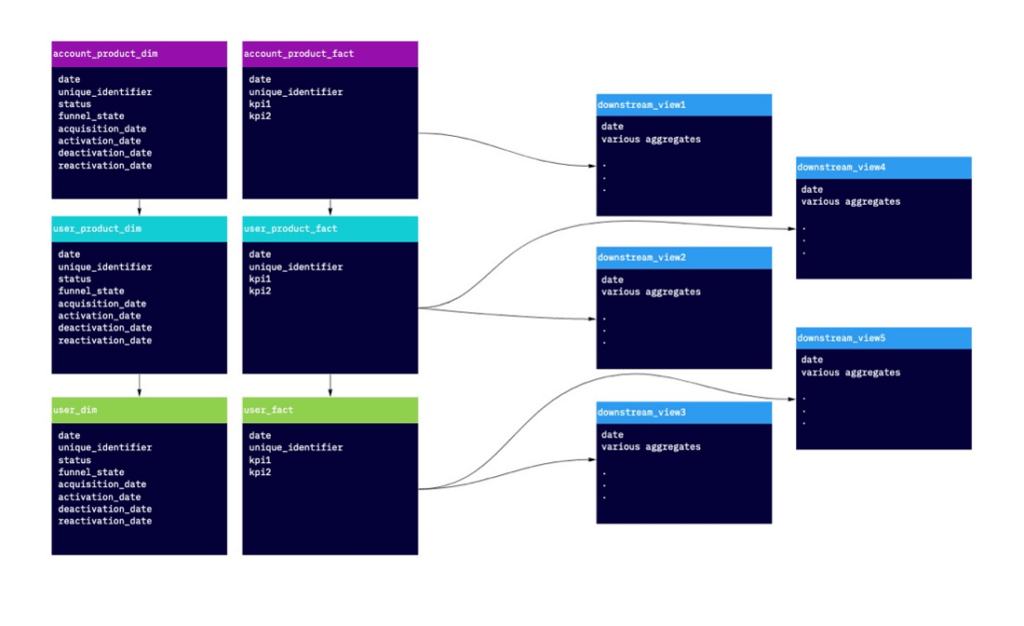

We started by drafting a uniform data model that built on the normalized table structure already in our data warehouse. The design is a star schema: tables at different levels of granularity across M1 products, with appropriate unique and foreign keys and with attributes named in business-friendly language.

This ensured everyone could understand the data regardless of skill level, and it further deepened analysts’ understanding of the underlying normalized tables.
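To illustrate the star-schema shape, here is a small sketch using Python’s built-in `sqlite3` module instead of Redshift. The table and column names (`dim_user`, `dim_product`, `fct_user_product_daily`) are hypothetical; the point is that a fact table keyed to shared dimensions lets one query shape work for every product.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables: one row per entity, business-friendly column names.
    CREATE TABLE dim_user (
        user_id     INTEGER PRIMARY KEY,
        signup_date TEXT NOT NULL
    );
    CREATE TABLE dim_product (
        product_id   INTEGER PRIMARY KEY,
        product_name TEXT NOT NULL UNIQUE
    );
    -- Fact table: one row per user, product, and day. The foreign keys are
    -- declared for documentation; SQLite enforces them only with PRAGMA foreign_keys = ON.
    CREATE TABLE fct_user_product_daily (
        user_id    INTEGER NOT NULL REFERENCES dim_user (user_id),
        product_id INTEGER NOT NULL REFERENCES dim_product (product_id),
        as_of_date TEXT    NOT NULL,
        balance    REAL    NOT NULL,
        PRIMARY KEY (user_id, product_id, as_of_date)
    );
    INSERT INTO dim_user VALUES (1, '2021-01-04'), (2, '2021-02-10');
    INSERT INTO dim_product VALUES (1, 'Invest'), (2, 'Borrow');
    INSERT INTO fct_user_product_daily VALUES
        (1, 1, '2021-03-01', 500.0),
        (1, 2, '2021-03-01', 0.0),
        (2, 1, '2021-03-01', 25.0);
    """
)

# The same query shape works for every product because the keys are shared.
rows = conn.execute(
    """
    SELECT p.product_name, COUNT(*) AS users_with_balance
    FROM fct_user_product_daily AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    WHERE f.balance > 0
    GROUP BY p.product_name
    ORDER BY p.product_name
    """
).fetchall()
print(rows)  # [('Invest', 2)]
```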

After setting up the table design, we made sure to stay consistent in how we define each dimension and calculate each measure. For example, on M1 Invest we consider a user no longer retained (i.e., ‘deactivated’) if they have a $0 balance for 30 consecutive days. The same base definition applies to M1 Borrow, where a user has ‘deactivated’ if they have a $0 balance for 30 consecutive days. So the logic for both calculations should look very similar.
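Because Invest and Borrow share the same base definition, the deactivation check can be written once and reused for both. A minimal sketch, assuming daily end-of-day balances keyed by date (for Borrow, the outstanding borrowed balance) and treating missing days as $0; `is_deactivated` is a hypothetical helper, and a production version would live in SQL.

```python
from datetime import date, timedelta

DEACTIVATION_WINDOW_DAYS = 30


def is_deactivated(daily_balances: dict[date, float], as_of: date,
                   window: int = DEACTIVATION_WINDOW_DAYS) -> bool:
    """True if the balance was $0 on every one of the `window` days ending at `as_of`.

    Days missing from `daily_balances` are treated as $0 (an assumption).
    """
    return all(
        daily_balances.get(as_of - timedelta(days=offset), 0.0) <= 0
        for offset in range(window)
    )


start = date(2021, 3, 1)
# Invest: balance drained to $0 on 3/1 and never refilled.
invest = {start + timedelta(days=d): 0.0 for d in range(30)}
# Borrow: $1,000 outstanding until the loan is paid off on 3/15.
borrow = {start + timedelta(days=d): (1000.0 if d < 14 else 0.0) for d in range(30)}

as_of = start + timedelta(days=29)
print(is_deactivated(invest, as_of))  # True
print(is_deactivated(borrow, as_of))  # False
```

The same function serves both products; only the source of the balances differs.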

Deciding on a 30-day buffer was crucial. We wanted to insulate the metric against transactional volatility. People move money around from time to time, and we would get a lot of false positives if a user counted as deactivated after sitting at $0 for just a day or a week. On the flip side, a metric with too much lag would mean we couldn’t respond to changes fast enough.

Although we settled on 30 days, our design lets us start counting on Day 1. This makes it simple to, for example, trigger a marketing email on Day 25 for someone who is about to become inactive.
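Counting from Day 1 amounts to keeping a running count of consecutive $0 days that resets whenever the balance goes back above $0. The sketch below assumes a chronological list of end-of-day balances; the Day 25 nudge comes from the example above, while `lifecycle_status` and the `"at_risk"` label are hypothetical.

```python
WARNING_DAY = 25       # nudge point from the marketing-email example
DEACTIVATION_DAY = 30  # the 30-day buffer


def lifecycle_status(daily_balances: list[float]) -> list[str]:
    """Label each day, given a chronological list of end-of-day balances."""
    statuses = []
    days_at_zero = 0
    for balance in daily_balances:
        # Any positive balance resets the counter, so short dips never accumulate.
        days_at_zero = days_at_zero + 1 if balance <= 0 else 0
        if days_at_zero >= DEACTIVATION_DAY:
            statuses.append("deactivated")
        elif days_at_zero >= WARNING_DAY:
            statuses.append("at_risk")
        else:
            statuses.append("retained")
    return statuses


# Funded, a one-week dip to $0, funded again, then 30 straight days at $0.
balances = [100.0] * 3 + [0.0] * 7 + [100.0] * 2 + [0.0] * 30
statuses = lifecycle_status(balances)
print(statuses[9])                # retained (Day 7 of the dip)
print(statuses.index("at_risk"))  # 36
print(statuses[-1])               # deactivated
```

The one-week dip never leaves “retained” because the counter resets, which is the false-positive protection the 30-day buffer is meant to provide.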

But achieving this level of consistency is easier said than done. The SQL for the entire M1 platform added up to many thousands of lines of code, spanning more than seventy-five files and referencing dozens of raw input tables, so enforcing standards at that scale was challenging. We solved this problem by adopting code quality standards and best practices typically used by software engineers, including:

- Minimum documentation requirements

- Minimum testing requirements before submitting code

- Formatting standards for writing SQL

But for a project this large, simply setting standards isn’t enough. The standards themselves must be built into automated processes for consistent enforcement, while still giving analysts enough real-time feedback to remain productive. Because the success of any new initiative comes down to execution, we kept these principles in mind as we developed the technical architecture and deployed the Pirate Framework.
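One way to build standards into an automated process is a check that runs on every proposed change and fails the build when a model breaks a rule. This is a minimal sketch, assuming model metadata has already been parsed into Python objects; the `Model` type, the rule set, and the messages are hypothetical, and in practice a dedicated SQL linter such as SQLFluff would handle the formatting rules.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Model:
    """Hypothetical metadata for one SQL model file."""
    name: str
    sql: str
    description: str = ""
    tests: list[str] = field(default_factory=list)


def check_model(model: Model) -> list[str]:
    """Return human-readable violations of three example standards."""
    problems = []
    # 1. Documentation: every model needs a non-empty description.
    if not model.description.strip():
        problems.append(f"{model.name}: missing description")
    # 2. Testing: every model needs at least one test before it is submitted.
    if not model.tests:
        problems.append(f"{model.name}: no tests defined")
    # 3. Formatting: example rules (no tabs, no trailing whitespace, no SELECT *).
    for lineno, line in enumerate(model.sql.splitlines(), start=1):
        if "\t" in line:
            problems.append(f"{model.name}:{lineno}: tab character")
        if line != line.rstrip():
            problems.append(f"{model.name}:{lineno}: trailing whitespace")
        if re.search(r"\bselect\s+\*", line, flags=re.IGNORECASE):
            problems.append(f"{model.name}:{lineno}: avoid SELECT *")
    return problems


good = Model("user_retention", "select user_id\nfrom balances",
             "Daily retention flags", ["not_null"])
bad = Model("scratch", "SELECT * \nfrom balances")
print(check_model(good))  # []
print(check_model(bad))   # four violations: description, tests, whitespace, SELECT *
```

A CI job or pre-commit hook could run this over every changed model and block the merge when the list is non-empty, giving analysts feedback before review rather than after.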

Deploy

Managing data at M1 is all about scale. We oversee many terabytes of data, hundreds of daily batch jobs, and support every team within the company. This kind of sprawl is only manageable with state-of-the-art tools and frameworks, as well as a passion for automating every routine process.

One of the most critical tools in M1’s entire ecosystem is an open-source tool called dbt (data build tool). This tool lets data analysts and data engineers collaborate on data modeling and query patterns. It allows an analyst to write mostly ordinary SQL, while also using the version-control tools that engineers use for code review, change management, backups, and recovery.

For the Pirate Framework, we integrated with dbt in several ways:

- The inputs to the Pirate Framework models are the “data core” of fine-grained, entity-level data that’s maintained for all of M1.

- The Pirate Framework logic itself is written (mostly by analysts) in dbt/SQL, committed to git, and reviewed in GitHub.

- The outputs of the Pirate Framework are themselves dbt models used downstream by more models, reports, and dashboards.
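dbt works out the order in which to build models from the `ref()` calls between them, which is what lets the Pirate Framework sit between the data core and downstream reporting. The sketch below imitates that idea with Python’s standard-library `graphlib`; the model names are hypothetical, and it illustrates dependency ordering rather than dbt’s own implementation.

```python
from graphlib import TopologicalSorter

# Hypothetical models, each mapped to the set of models it references,
# the way ref() declares upstream dependencies in dbt.
dependencies = {
    "core_users": set(),
    "core_daily_balances": set(),
    "pirate_user_product_daily": {"core_users", "core_daily_balances"},
    "pirate_retention": {"pirate_user_product_daily"},
    "tableau_retention_extract": {"pirate_retention"},
}

# static_order() yields every model after all of its upstream dependencies.
build_order = list(TopologicalSorter(dependencies).static_order())
print(build_order)
```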

Using dbt also let us add more tests to the code base to help with data quality. The dbt data catalog added transparency into the logic, which helps explain governed definitions. This helped prevent misuse of the vast number of data points generated while building out the Pirate Framework metrics.
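dbt ships with a few built-in generic tests (`unique`, `not_null`, `accepted_values`, and `relationships`) that are declared in YAML and compiled to SQL. The Python functions below only mimic the idea behind three of them on in-memory rows, returning the failing records; the column names and status values are hypothetical.

```python
from collections import Counter
from typing import Any, Iterable

Row = dict[str, Any]


def not_null(rows: list[Row], column: str) -> list[Row]:
    """Failing rows: the column is missing or None."""
    return [row for row in rows if row.get(column) is None]


def unique(rows: list[Row], column: str) -> list[Any]:
    """Failing values: non-null values that appear more than once."""
    counts = Counter(row.get(column) for row in rows if row.get(column) is not None)
    return [value for value, n in counts.items() if n > 1]


def accepted_values(rows: list[Row], column: str, allowed: Iterable[Any]) -> list[Row]:
    """Failing rows: the value is not in the allowed set."""
    allowed_set = set(allowed)
    return [row for row in rows if row.get(column) not in allowed_set]


rows = [
    {"user_id": 1, "status": "retained"},
    {"user_id": 2, "status": "deactivated"},
    {"user_id": 2, "status": "churned"},
    {"user_id": None, "status": "retained"},
]
print(not_null(rows, "user_id"))  # [{'user_id': None, 'status': 'retained'}]
print(unique(rows, "user_id"))    # [2]
print(accepted_values(rows, "status", {"retained", "deactivated"}))
```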

Reviewing all the SQL code to ensure it runs efficiently on our Amazon Redshift cluster was another critical step. Once again, dbt is critical for solving the kinds of performance problems that can arise with complex business logic. For example, we might identify a particularly slow query and develop a strategy to “materialize” the results ahead of time on a daily schedule. In practice, going from a prototype to a full, production-level query can take weeks of iteration.
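The trade-off behind materializing a slow query can be shown with two of dbt’s materializations, `view` and `table`. This sketch uses SQLite through Python’s `sqlite3` rather than Redshift, and the table names are hypothetical: the view recomputes on every read, while the table is computed once and stays stale until it is rebuilt.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE daily_balances (user_id INTEGER, as_of_date TEXT, balance REAL);
    INSERT INTO daily_balances VALUES
        (1, '2021-03-01', 100.0), (1, '2021-03-02', 0.0),
        (2, '2021-03-01', 0.0),   (2, '2021-03-02', 0.0);

    -- Like a "view" materialization: the aggregation reruns on every read.
    CREATE VIEW zero_days_view AS
    SELECT user_id, SUM(balance <= 0) AS zero_days
    FROM daily_balances GROUP BY user_id;

    -- Like a "table" materialization: computed once at build time (e.g. a daily job).
    CREATE TABLE zero_days_table AS
    SELECT user_id, SUM(balance <= 0) AS zero_days
    FROM daily_balances GROUP BY user_id;
    """
)


def read(name: str) -> list[tuple]:
    # `name` comes from this script, never from user input, so the f-string is safe here.
    return conn.execute(f"SELECT user_id, zero_days FROM {name} ORDER BY user_id").fetchall()


print(read("zero_days_view") == read("zero_days_table"))  # True

# New data arrives: the view reflects it immediately, the table only after a rebuild.
conn.execute("INSERT INTO daily_balances VALUES (1, '2021-03-03', 0.0)")
print(read("zero_days_view"))   # [(1, 2), (2, 2)]
print(read("zero_days_table"))  # [(1, 1), (2, 2)]
```

Scheduling the table rebuild daily trades freshness for read speed, which suits metrics that are reported on a daily grain anyway.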

dbt also helped us calculate Retention, an L1 metric that requires looking back over each user’s prior 30 days of balance history to determine whether they had a balance greater than $0. This lookback is computationally expensive in SQL and doesn’t scale well, especially when done across all users for all products.

To solve this, we used dbt’s snapshot functionality to capture state changes. In our case, a new record is inserted for a user each time their balance crosses the $0 threshold, and the prior record is closed out by updating its effective from and to dates. The time between state changes then tells us when a user has deactivated.
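The snapshot approach can be sketched in plain Python: write a record only when a user’s has-a-balance state changes, close the previous record’s validity window, and derive deactivation from how long each $0 interval lasted. This illustrates the idea under stated assumptions and is not dbt’s snapshot implementation (dbt tracks validity in `dbt_valid_from`/`dbt_valid_to` columns). `StateRecord`, `record_state`, and `deactivation_dates` are hypothetical names, and `valid_to` is assumed to equal the next record’s `valid_from`.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

DEACTIVATION_DAYS = 30


@dataclass
class StateRecord:
    """One row of a hypothetical snapshot table."""
    user_id: int
    has_balance: bool
    valid_from: date
    valid_to: Optional[date] = None  # None = current record; else the next record's valid_from


def record_state(history: list[StateRecord], user_id: int,
                 has_balance: bool, as_of: date) -> None:
    """Write a record only when the user's state changes, closing the prior record."""
    current = next((r for r in reversed(history)
                    if r.user_id == user_id and r.valid_to is None), None)
    if current is not None and current.has_balance == has_balance:
        return  # unchanged state: nothing is written
    if current is not None:
        current.valid_to = as_of
    history.append(StateRecord(user_id, has_balance, as_of))


def deactivation_dates(history: list[StateRecord], user_id: int,
                       as_of: date) -> list[date]:
    """Dates on which a $0 interval reached its 30th consecutive day."""
    dates = []
    for r in history:
        if r.user_id != user_id or r.has_balance:
            continue
        # Day 1 at $0 is valid_from, so Day 30 is valid_from + 29 days.
        day_30 = r.valid_from + timedelta(days=DEACTIVATION_DAYS - 1)
        # A closed interval's last $0 day is the day before valid_to; an open one runs to as_of.
        last_zero_day = (r.valid_to - timedelta(days=1)) if r.valid_to else as_of
        if day_30 <= last_zero_day:
            dates.append(day_30)
    return dates


history: list[StateRecord] = []
record_state(history, 42, True, date(2021, 1, 1))   # funded
record_state(history, 42, True, date(2021, 1, 6))   # no change, not written
record_state(history, 42, False, date(2021, 2, 1))  # drops to $0
record_state(history, 42, True, date(2021, 3, 15))  # comes back
record_state(history, 42, False, date(2021, 4, 1))  # $0 again, still open

print(len(history))                                        # 4
print(deactivation_dates(history, 42, date(2021, 4, 20)))  # [datetime.date(2021, 3, 2)]
```

Only four rows describe 110 days of history, and the record opened on 2021-03-15 marks a user returning after deactivating, which corresponds to the Rescue event in the AAARRRRS definitions.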

After overcoming these hurdles and laying a core foundation based on consistency and reliability, we built downstream Tableau data extracts. These were views derived from the metrics deployed to the common tables, and they supplied the data needed to build the dashboards that brought the Pirate Framework to life.

Productize

The Pirate Framework has been a significant undertaking for our Analytics and Data Engineering teams. But it laid the groundwork for creating dashboards, building a strong data culture, and proliferating access to data throughout M1.

Our goal was to build dashboards that anyone at M1 can use to understand the current health and overall trends of the business. But first, we had to change the data culture. How do you change the way people talk about data?

We had to start by building a great dashboard that applied key product design principles.

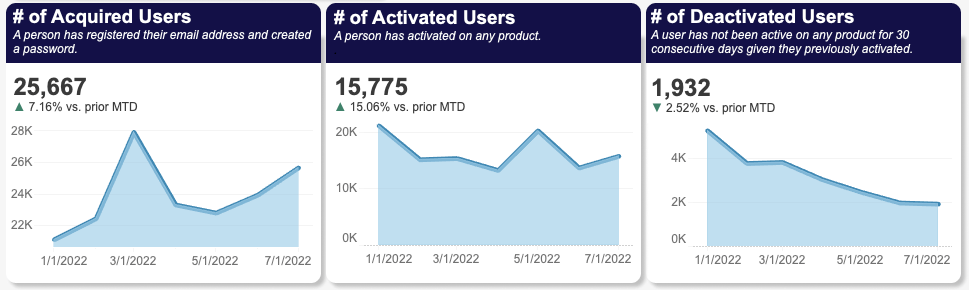

- Intuitive UI – Clear headers, metric values, and charts are at the core of Pirate Framework dashboards. This keeps the end-user focused on the data.

- Simple and easy to understand – Data is categorized and organized appropriately to help guide the end-user to exactly what they are looking for.

- Selecting the right visualization – For the first round of dashboards, we primarily used time-series graphs to help the end-user understand overall health and trends.

Our standardized framework has also made fulfilling analytics requests and supporting self-service more efficient. Having a consistent table structure across products makes it easier for data users, including our own analytics teams, to write queries and build dashboards. The Data team also benefits from scalability because we’re better positioned to deliver reporting as M1 forms new business units and launches new products.

Throughout our journey, we continued to socialize and seek alignment with our stakeholders on definitions, data design, and visualizations. After building our MVP, we conducted roadshows with each department to demo our work and answer questions. We then presented the Pirate Framework and its output to the entire company. Now, we consistently share updates to public Slack channels and iterate. It takes anywhere from 7 to 12 touchpoints for information to register with an end-user, and we’re taking that maxim as gospel.

So what’s next?

The Pirate Framework has generated significant attention since its initial launch. After all, we laid the groundwork for analytics going forward. Keeping one of M1’s core values, Transparency, in mind, we’re on a mission to create new dashboards and analyses that deliver deeper insights. Having access to data allows our teams to ask better business questions, prioritize more effectively, and simply do better work.
